Chapter 4 Training / Test Data

https://www.stat.berkeley.edu/~aldous/157/Papers/shmueli.pdf

Predictive power is assessed using metrics computedfrom a holdout set or using cross-validation (Stone,1974; Geisser,1975)

Testing the procedure on the data that gave it birth is almost certain to overestimate performance” (Mosteller and Tukey,1977).

Let’s extend this idea of training and test splits. Remember, our goal is to generate a robust model that better estimates out-of-sample error. We can do this by resampling our data set in a way that allows us to learn from the data but not so much so that it follows the data set too closely.

We can take a single data set and partition / split it into a number of train / test subsets. We just did that in the earlier section but we only did it once. If we do this a number of times we hope we are training our model more effectively.

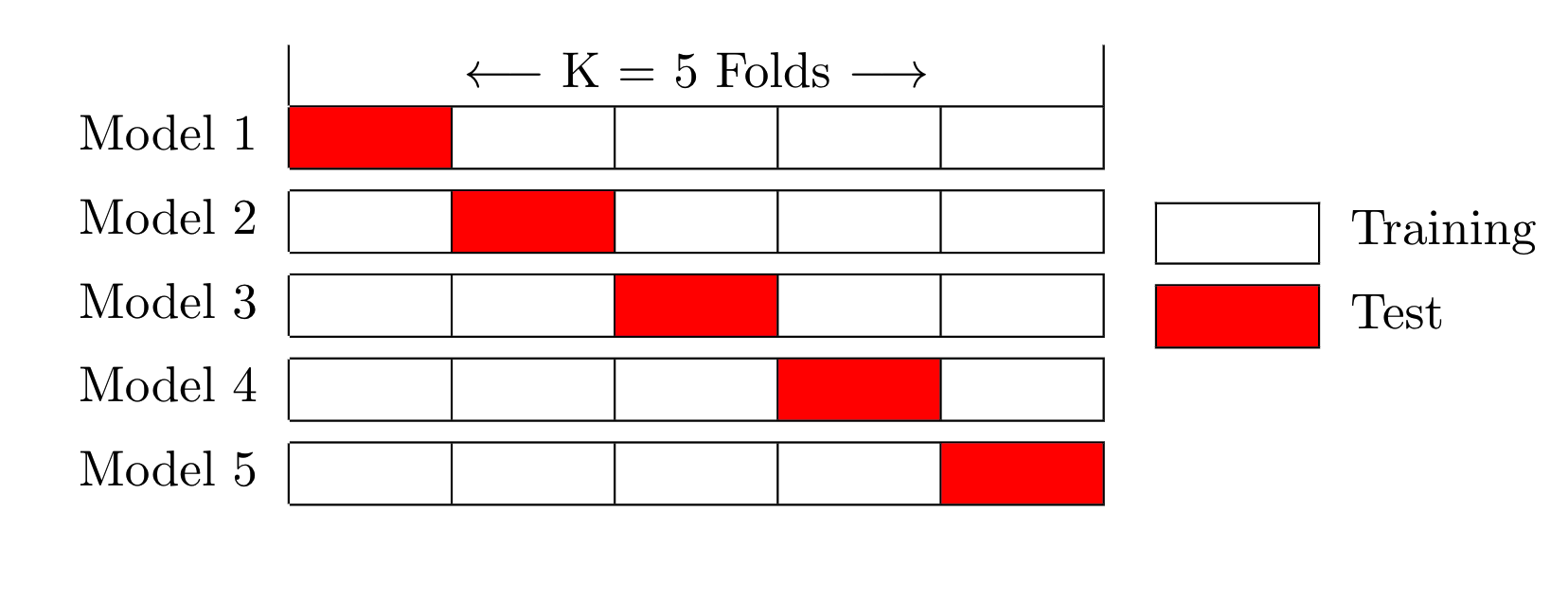

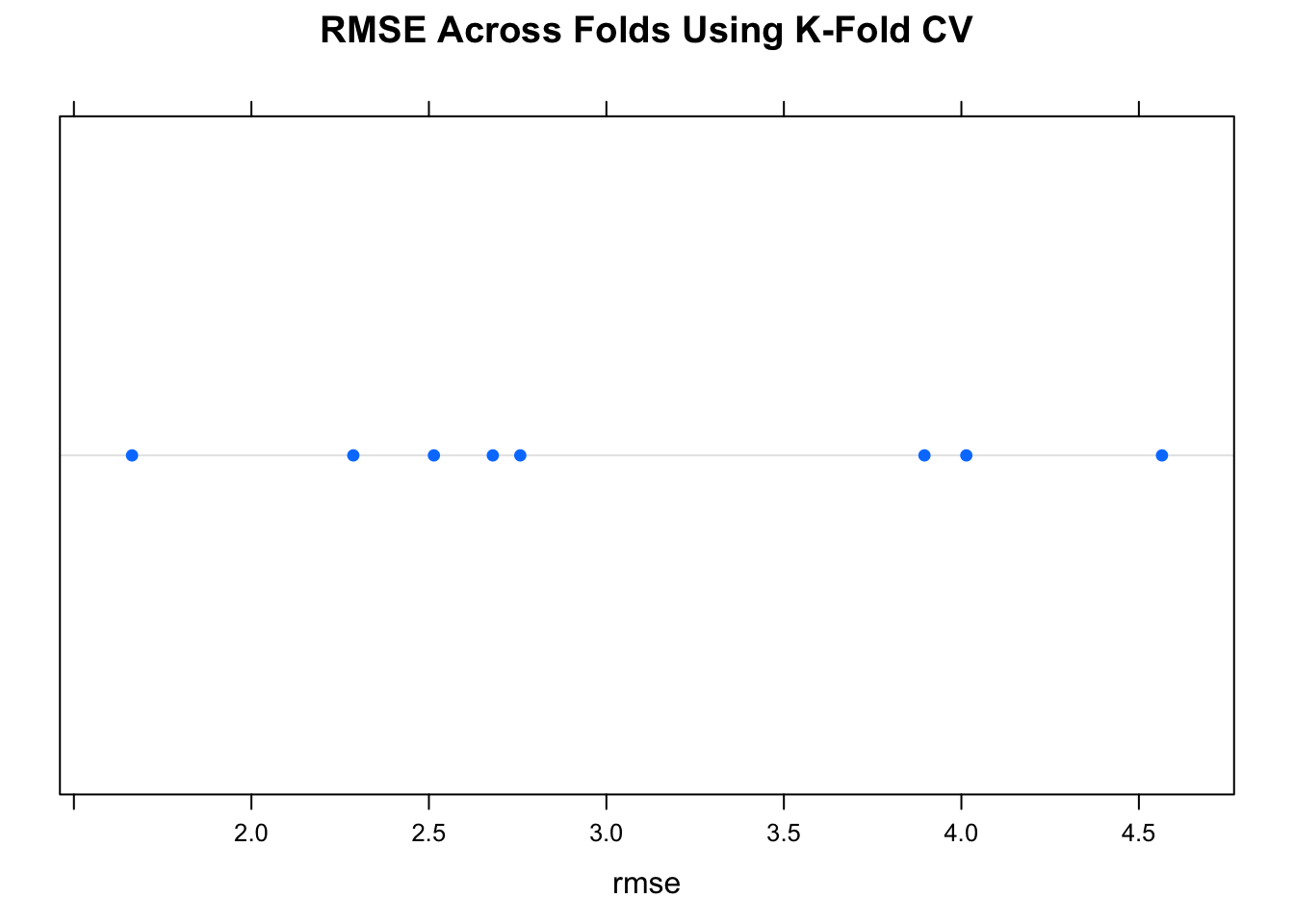

What would the RMSE look like if we created say K number of subsets of the data frame and selectively held out each of the K subsets, built a model on the combined remaining subsets, and then tested the model on the holdout ? We would then average the RMSE to get an idea of its variation. The series of sequential steps would be as follows:

Subset the data frame into k groups

For each subset:

Consider the subset as a "hold out"" or test data set

Combine the remaining subsets as a training data set

Fit a model on the combined training set

Evaluate the model using the holdout test set

Save the evaluation score (e.g. RNSE)

Summarize evaluation score (e.g. mean of RMSE)This is called K-Fold Cross Validation. A general view of this procedure is depicted in the following figure which shows a 5 Fold scenario.

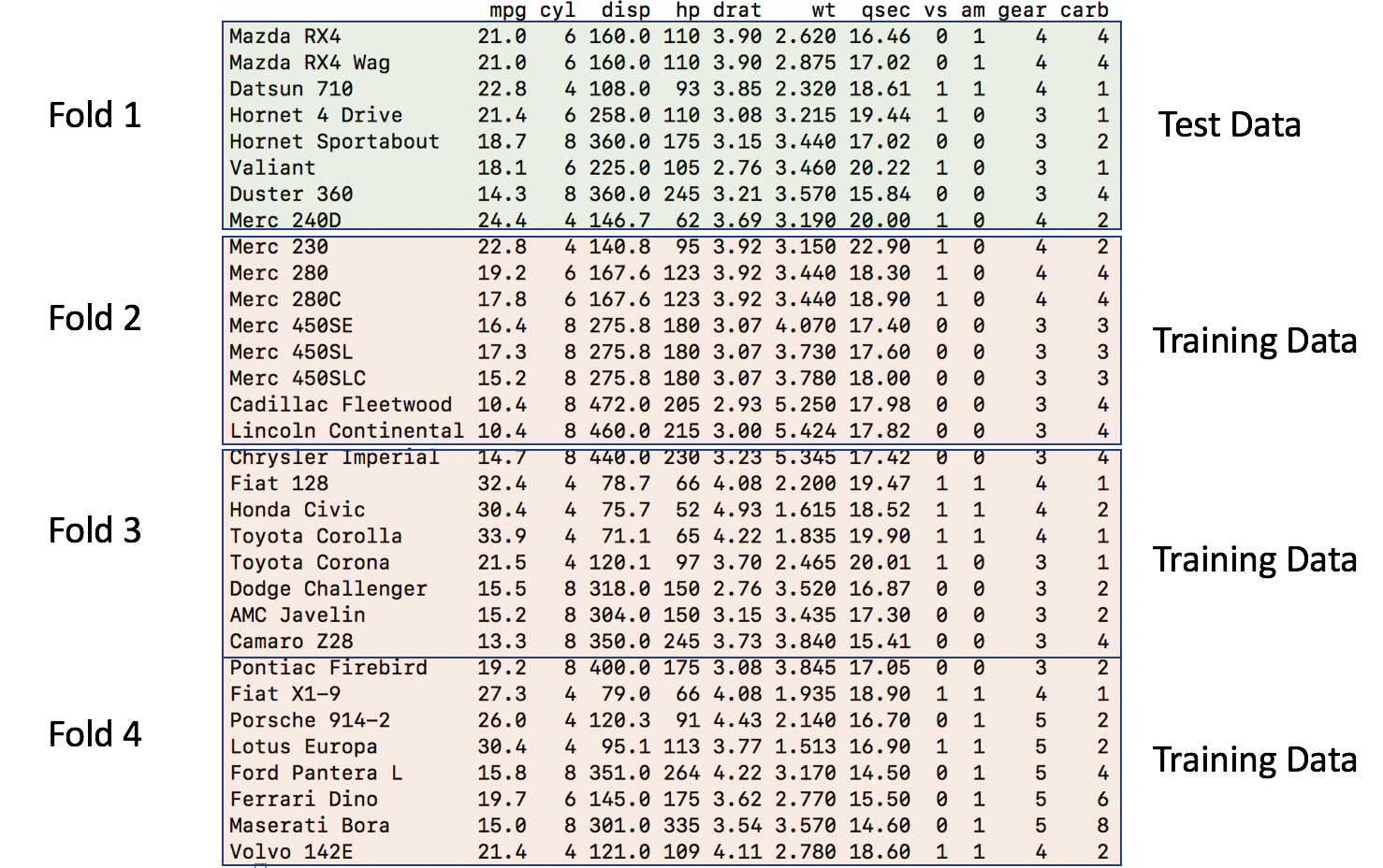

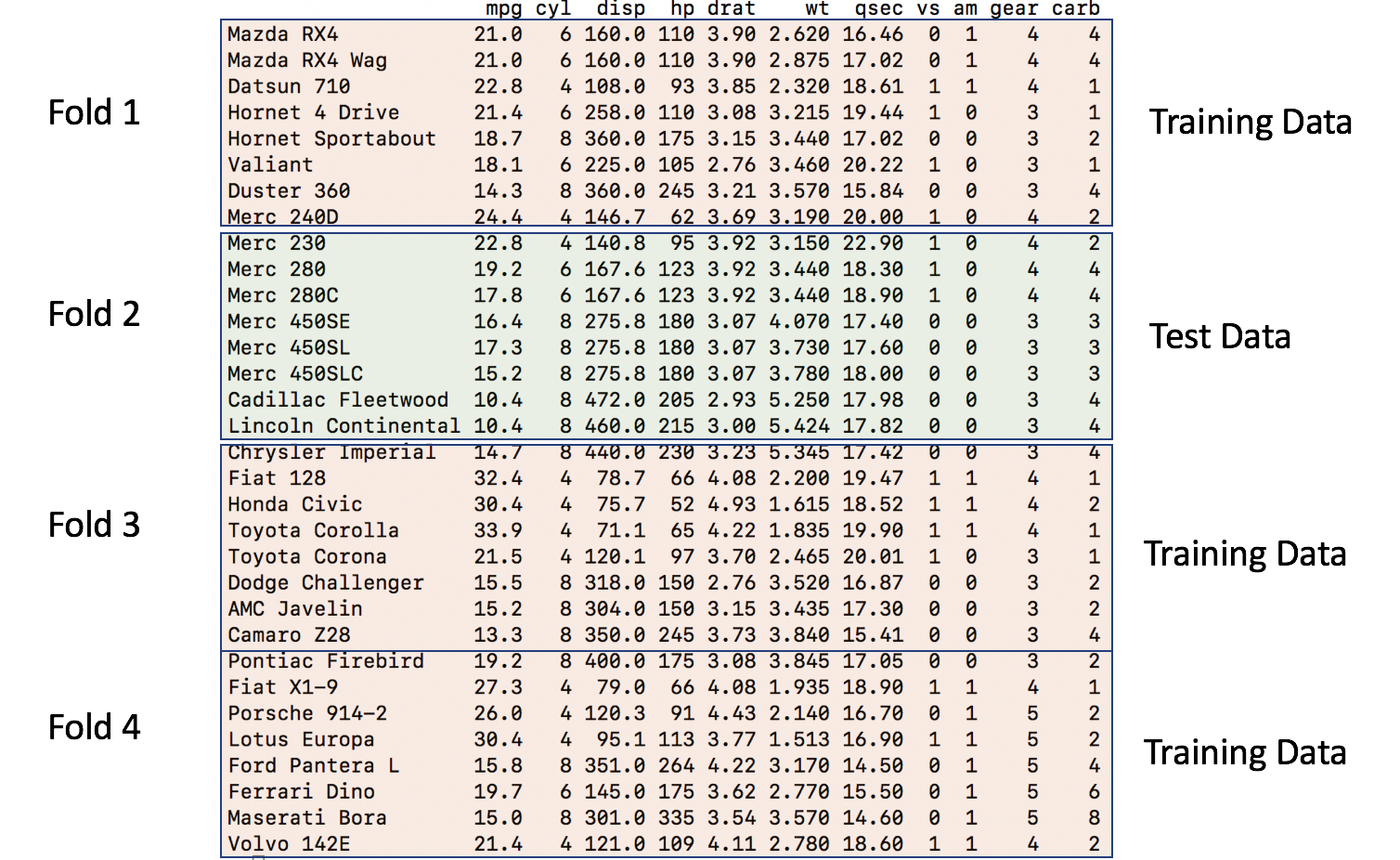

Now, here is the general idea in illustrated form relative to mtcars. Assume we want 4 folds. We would divide the data frame into 4 folds of 8 records each. The first model would be built using Fold 1 as the holdout / test data set after first combining Folds 2,3 and 4 into a training set set

So the second iteration would then take the second fold as the holdout / test data frame and combine Folds 1,3, and 4 into a training data frame.

# Generates Some Folds

num_of_folds <- 4

# This generates 8 groups of 4 indices such that each

# group has unique observations. No observation is used

# more than once - although we could use bootstrapping

set.seed(321)

folds <- split(sample(1:nrow(mtcars)),1:num_of_folds)

# We should have 32 indicies across the 8 groups

sum(sapply(folds,length))## [1] 32Check out the folds to get a better understanding of what is going on. We generated a list that has 8 elements each of which holds a 4 element vector corresponding to indices for records in the mtcars data frame.

## $`1`

## [1] 22 25 4 23 20 26 8 12

##

## $`2`

## [1] 18 24 15 9 21 14 3 1

##

## $`3`

## [1] 29 16 11 2 27 28 6 19

##

## $`4`

## [1] 13 17 31 32 7 10 5 30Again, each list element has the indices of eight unique observations from the data frame. We have four folds with eight elements each for a total of 32 numbers corresponding to row numbers from the mtcars data frame.

## mpg cyl disp hp drat wt qsec vs am gear carb

## Dodge Challenger 15.5 8 318.0 150 2.76 3.520 16.87 0 0 3 2

## Pontiac Firebird 19.2 8 400.0 175 3.08 3.845 17.05 0 0 3 2

## Hornet 4 Drive 21.4 6 258.0 110 3.08 3.215 19.44 1 0 3 1

## AMC Javelin 15.2 8 304.0 150 3.15 3.435 17.30 0 0 3 2

## Toyota Corolla 33.9 4 71.1 65 4.22 1.835 19.90 1 1 4 1

## Fiat X1-9 27.3 4 79.0 66 4.08 1.935 18.90 1 1 4 1

## Merc 240D 24.4 4 146.7 62 3.69 3.190 20.00 1 0 4 2

## Merc 450SE 16.4 8 275.8 180 3.07 4.070 17.40 0 0 3 34.1 Cross Fold Validation

To implement the cross validation, we will create a processing loop that will execute once for each of the 8 folds. During each execution of the loop we will create a model using data combined from all folds except the fold corresponding to the current loop number (e.g, 1, 2, .. 4).

Once the model is built we then test it on the fold number corresponding to the current loop number.

So now we can create some lists to contain the models that we make along withe the associated predictions, errors and computed RMSE. We we can inspect any of the intermediate results after the fact to validate our work or look more closely at any specific result.

# Next we setup some blank lists to stash results

folddf <- list() # Contains folds

modl <- list() # Hold each of the K models

predl <- list() # Hold rach of the K predictions

rmse <- list() # Hold the computed rmse for a given model

# Now, for each of the 8 subgroups of holdout data we will

# create a lda model based on all the data *except* the

# holdout group

for (ii in 1:length(folds)) {

# This list holds the actual model we create for each of the

# 10 folds

modl[[ii]] <- lm(formula = myform,

data = mtcars[-folds[[ii]],]

)

# This list will contain / hold the models build on the fold

predl[[ii]] <- predict(modl[[ii]],

newdata=mtcars[folds[[ii]],],

type="response")

# This list will hold the results of the confusion matrix

# function. This obkect will contain info on the

# accuracy, sensitivity/recall, specificity

# and so on for each model per fold

errors <- predl[[ii]]-mtcars[folds[[ii]],]$mpg

rmse[[ii]] <- sqrt(mean(errors^2))

}The above list structures allow us to drill down into any aspect of the models and predictions we have made for each of the 4 folds. More importantly we can see how well the model works against each of the individual holdout / test data sets. In the end, we just want to be able to look at the average RMSE across the folds. This gives us clues as to how good the model might perform against new data.

## [1] 3.046228## [1] 0.70719754.2 Create A Function To Automate Things

Since we have gone to the trouble of creating a loop structure to process the folds, we could easily turn this into a function to automate the splitting of the data frame across some arbitrary number of folds just to get an idea of how the RMSE looks for different numbers of folds.

We could even have our function accommodate different formula if we wanted but we won’t focus on that right now. You will soon discover that the caret package does these kinds of things for you but we aren’t quite there yet.

make_mtcars_model <- function(formula=myform, num_of_folds=8) {

folds <- split(sample(1:nrow(mtcars)),1:num_of_folds)

modl <- list()

predl <- list()

rmse <- list()

# Now, for each of the 10 subgroups of holdout data we will

# create a lda model based on all the data *except* the

# holdout group

for (ii in 1:length(folds)) {

# This list holds the actual model we create for each of the folds

modl[[ii]] <- lm(formula = myform,

data = mtcars[-folds[[ii]],]

)

# This list will contain / hold the models build on the fold

predl[[ii]] <- predict(modl[[ii]],

newdata=mtcars[folds[[ii]],],

type="response")

# Let's compute the RMSE and save it

errors <- predl[[ii]]-mtcars[folds[[ii]],]$mpg

rmse[[ii]] <- sqrt(mean(errors^2))

}

return(rmse=unlist(rmse))

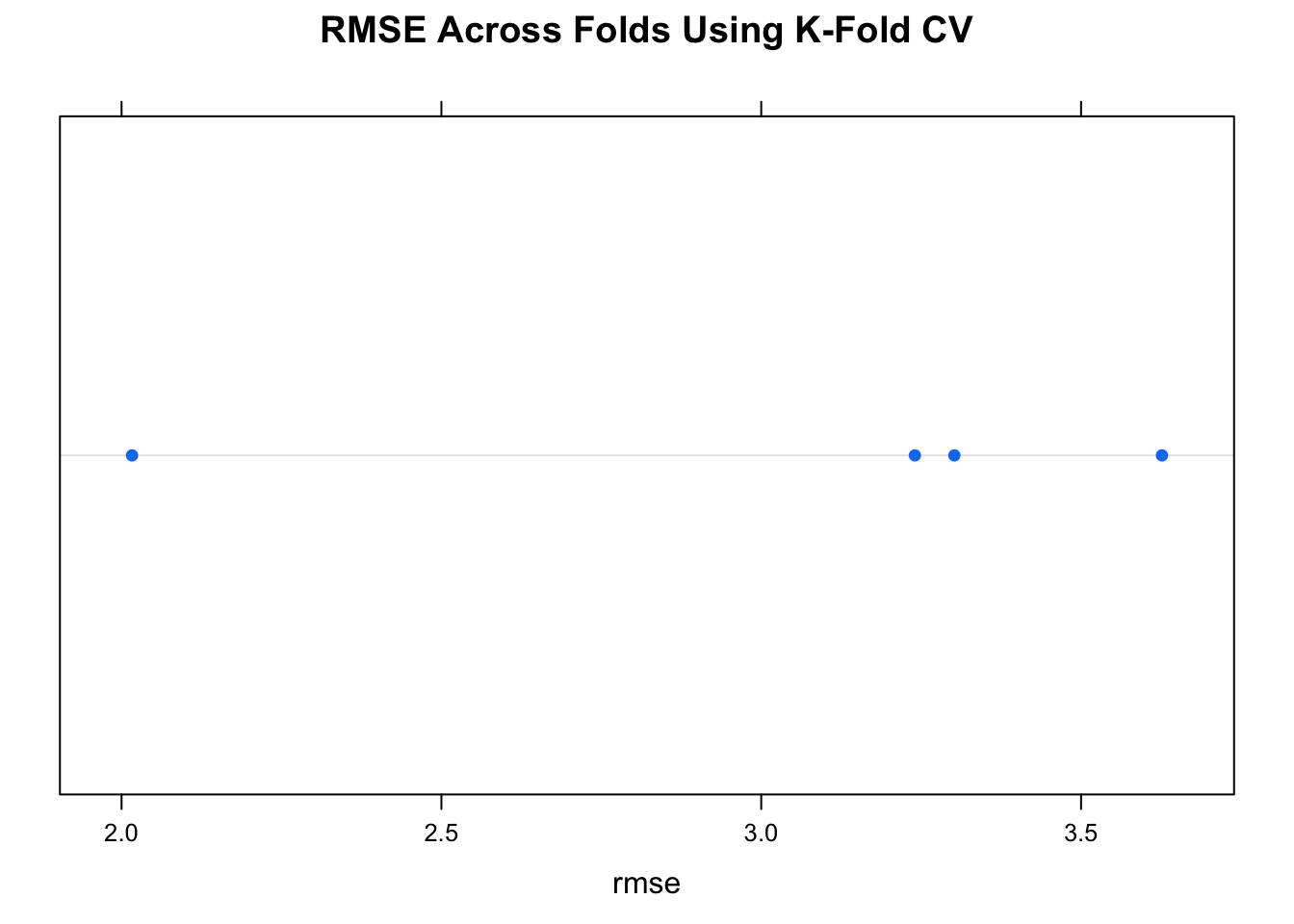

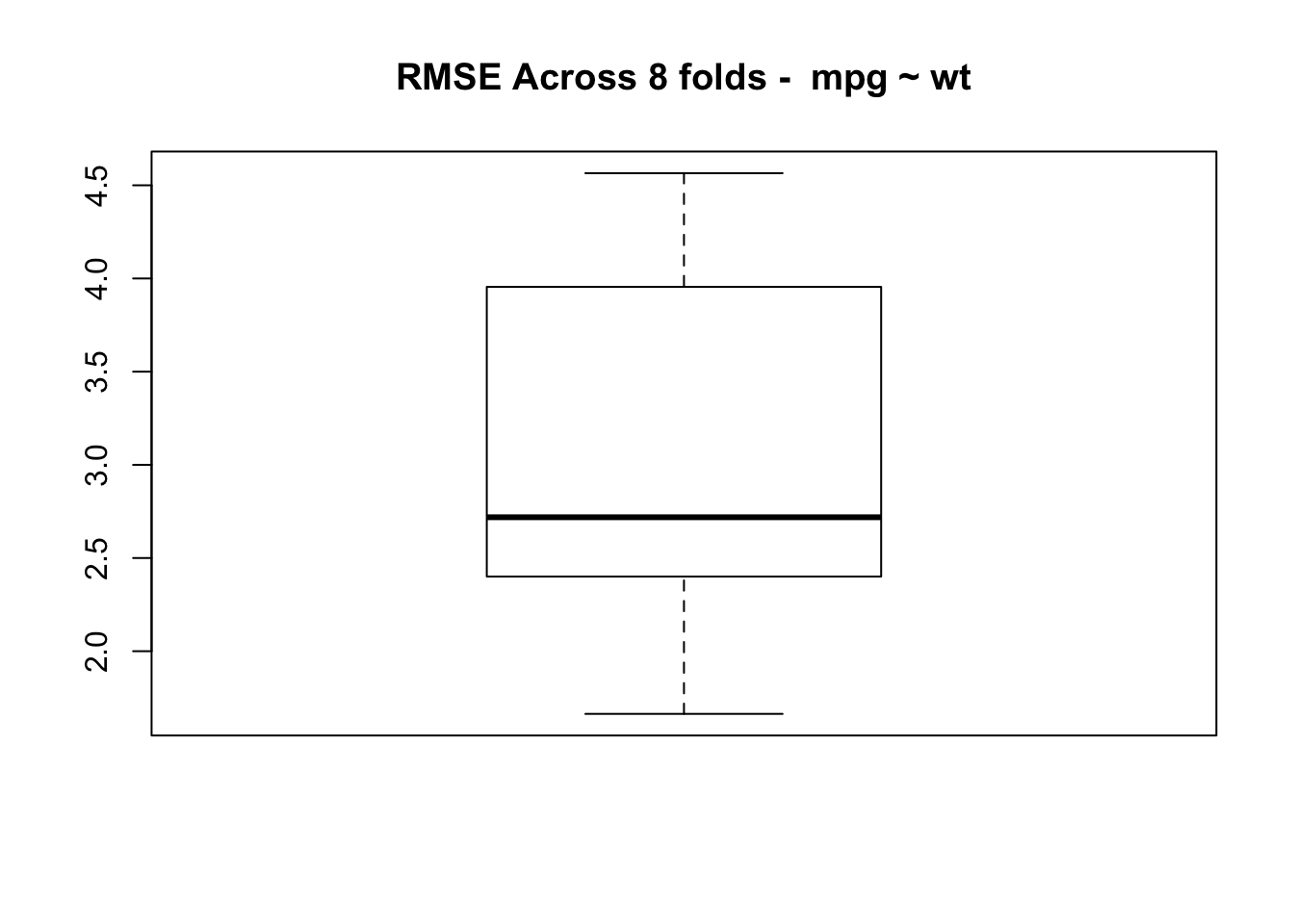

}Let’s look at the average RMSE across 8 folds.

num_of_folds <- 8

set.seed(321)

rmse <- make_mtcars_model(num_of_folds)

title <- paste("RMSE Across",num_of_folds,

"folds - ",as.character(deparse(myform)),sep=" ")

print(mean(rmse))## [1] 3.047164

## [1] 0.9967001

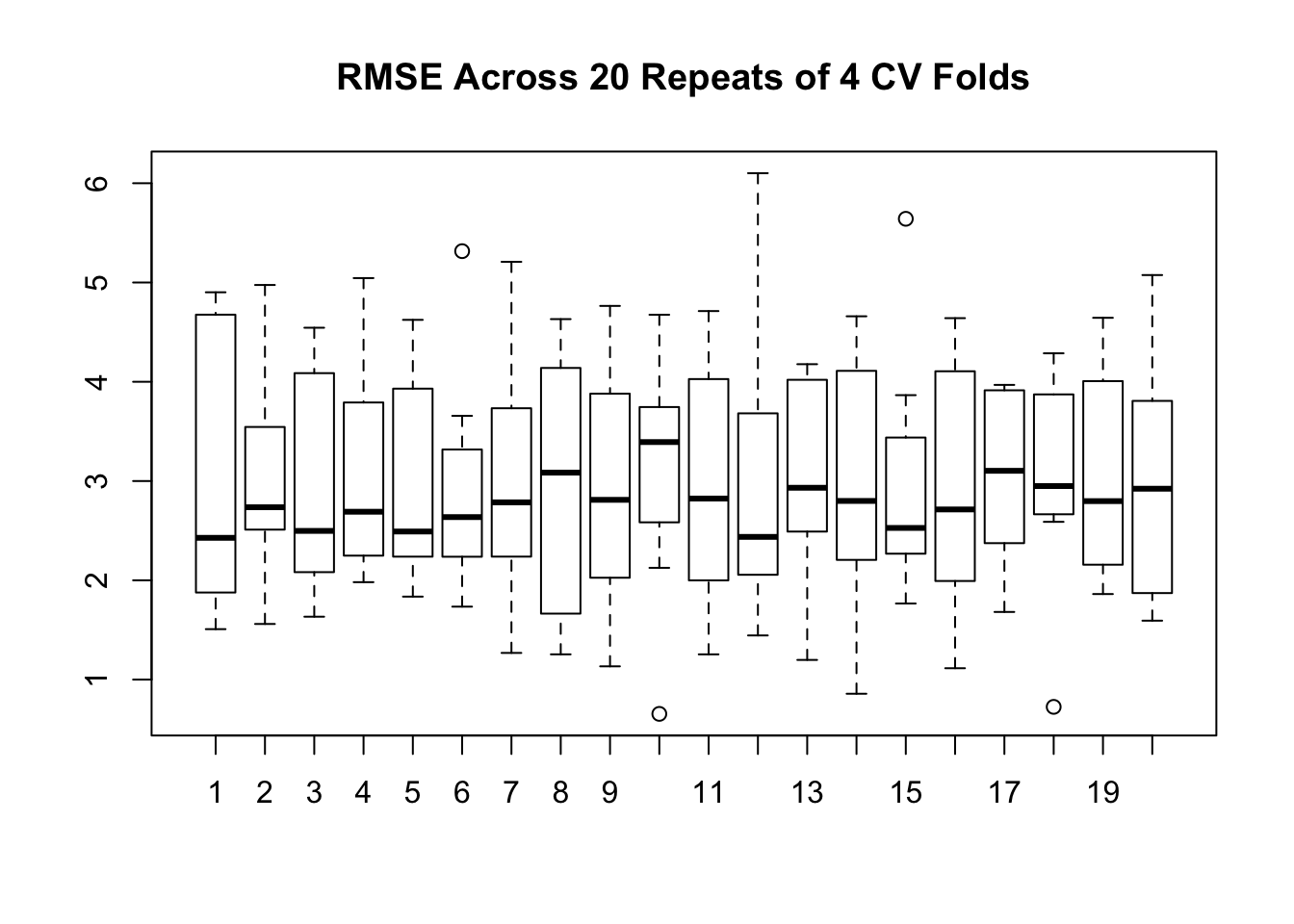

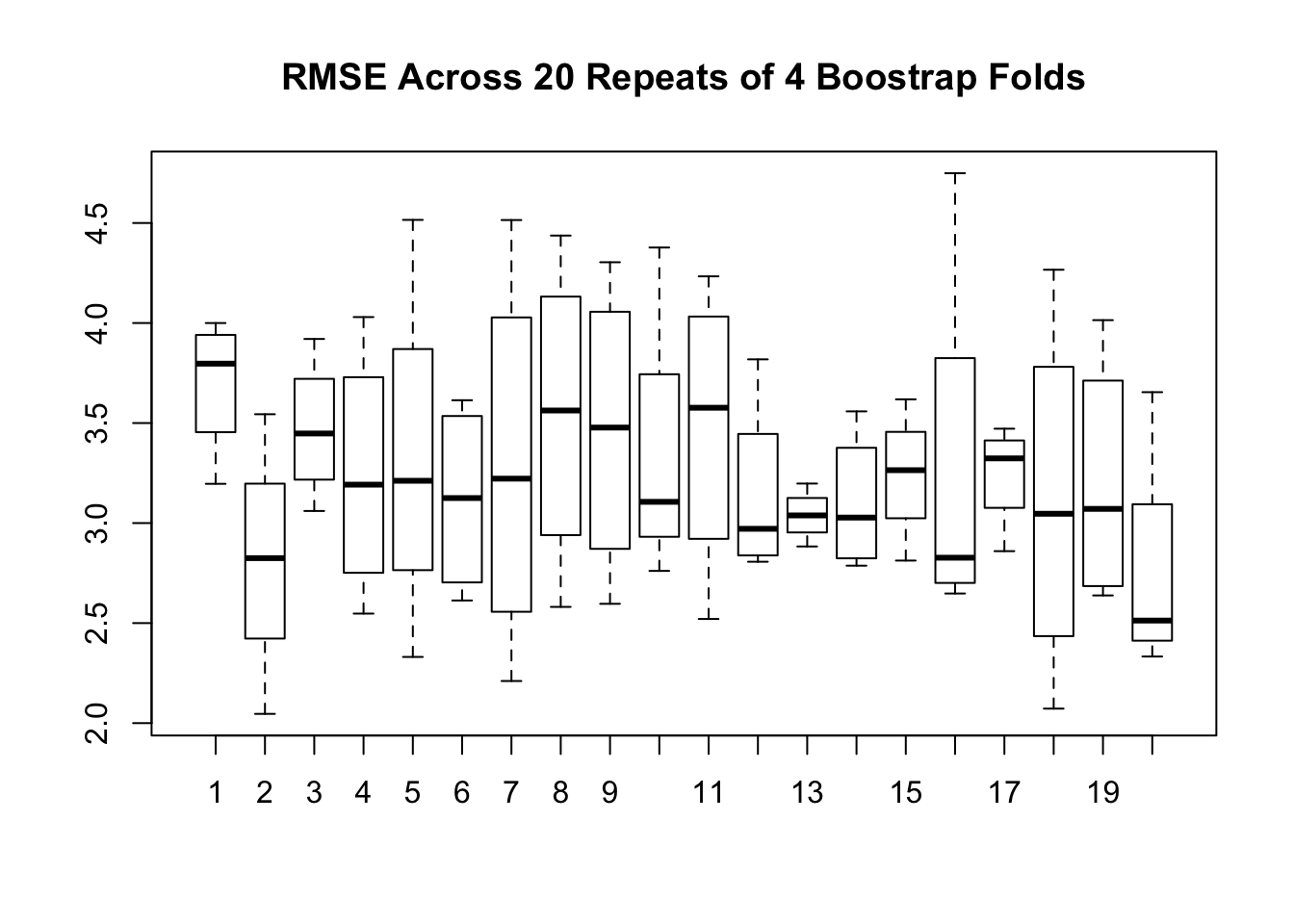

4.3 Repeated Cross Validation

Since we already have an existing function we can up the ante by repeating the cross validation. This will provide more data on how the RMSE might be distributed across multiple runs, each of which does Cross Fold validation. This example will repeat a 4 Fold Cross Validation , 20 times.

num_of_folds <- 4

# Just to be clear - here is what happens when we call the function

# once. We get back 4 RMSE values - one for each fold

set.seed(321)

(rmse <- make_mtcars_model(num_of_folds))## [1] 4.565091 3.895989 2.514073 2.757429 2.287045 2.680096 1.663681 4.013908# Now we repeat this some number of times - like 10. So we get back

# 80 RMSE values

repeated_cv_rmse <- sapply(1:20,make_mtcars_model)

boxplot(repeated_cv_rmse,

main="RMSE Across 20 Repeats of 4 CV Folds")

title <- paste("RMSE Across",num_of_folds,

"folds - ",as.character(deparse(myform)),sep=" ")

mean(as.vector(repeated_cv_rmse))## [1] 2.993835

## V1 V2 V3 V4

## Min. :1.508 Min. :1.560 Min. :1.633 Min. :1.981

## 1st Qu.:2.027 1st Qu.:2.535 1st Qu.:2.146 1st Qu.:2.360

## Median :2.428 Median :2.736 Median :2.498 Median :2.691

## Mean :3.046 Mean :3.015 Mean :2.939 Mean :3.061

## 3rd Qu.:4.581 3rd Qu.:3.342 3rd Qu.:4.087 3rd Qu.:3.538

## Max. :4.902 Max. :4.975 Max. :4.544 Max. :5.045

## V5 V6 V7 V8

## Min. :1.836 Min. :1.735 Min. :1.269 Min. :1.254

## 1st Qu.:2.269 1st Qu.:2.310 1st Qu.:2.311 1st Qu.:1.746

## Median :2.492 Median :2.637 Median :2.785 Median :3.085

## Mean :2.973 Mean :2.929 Mean :2.999 Mean :2.958

## 3rd Qu.:3.844 3rd Qu.:3.148 3rd Qu.:3.550 3rd Qu.:3.915

## Max. :4.624 Max. :5.316 Max. :5.208 Max. :4.631

## V9 V10 V11 V12

## Min. :1.133 Min. :0.6545 Min. :1.254 Min. :1.445

## 1st Qu.:2.213 1st Qu.:2.8133 1st Qu.:2.121 1st Qu.:2.198

## Median :2.811 Median :3.3927 Median :2.824 Median :2.437

## Mean :2.917 Mean :3.0968 Mean :2.958 Mean :2.987

## 3rd Qu.:3.617 3rd Qu.:3.6623 3rd Qu.:4.022 3rd Qu.:3.316

## Max. :4.764 Max. :4.6753 Max. :4.712 Max. :6.101

## V13 V14 V15 V16

## Min. :1.198 Min. :0.8574 Min. :1.766 Min. :1.114

## 1st Qu.:2.518 1st Qu.:2.2471 1st Qu.:2.298 1st Qu.:2.122

## Median :2.932 Median :2.7995 Median :2.528 Median :2.714

## Mean :3.033 Mean :2.9685 Mean :2.984 Mean :2.923

## 3rd Qu.:4.020 3rd Qu.:4.0352 3rd Qu.:3.225 3rd Qu.:3.968

## Max. :4.177 Max. :4.6596 Max. :5.642 Max. :4.641

## V17 V18 V19 V20

## Min. :1.681 Min. :0.7252 Min. :1.862 Min. :1.593

## 1st Qu.:2.465 1st Qu.:2.7033 1st Qu.:2.158 1st Qu.:1.967

## Median :3.103 Median :2.9494 Median :2.798 Median :2.923

## Mean :3.054 Mean :2.9983 Mean :3.054 Mean :2.984

## 3rd Qu.:3.904 3rd Qu.:3.8184 3rd Qu.:3.983 3rd Qu.:3.749

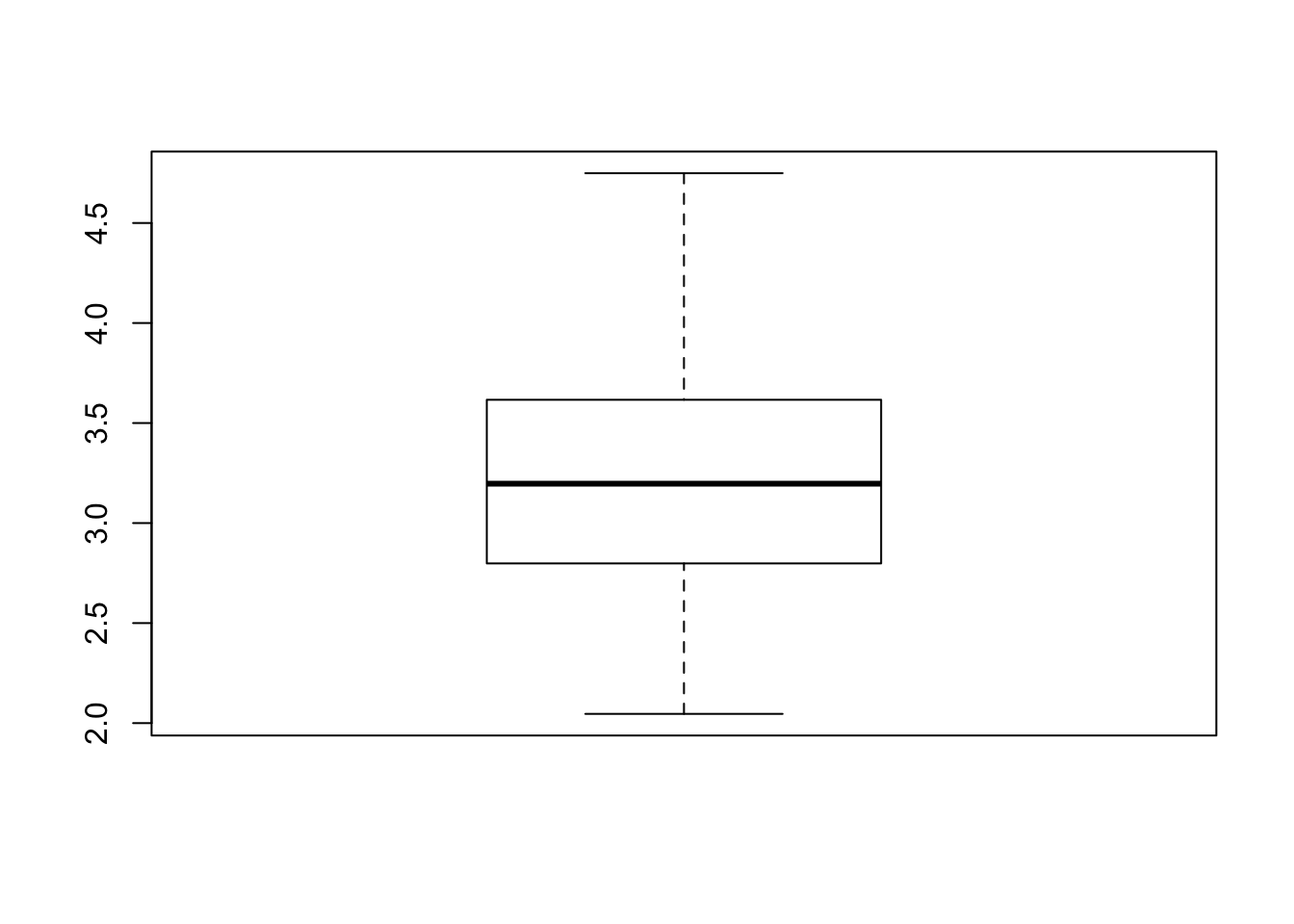

## Max. :3.969 Max. :4.2868 Max. :4.645 Max. :5.0754.4 Bootstrap

An alternative to K-Fold Cross Validation is to use the bootstrap sampling approach which will produce training data sets the same size as the original data set although some observations might be repeated as the sampling process is done with replacement. The observations that do not appear in each of the training sets are then used as a test set. These observations are known as “out of bag samples”. We’ll make a function to do bootstrap sampling.

make_mtcars_boot <- function(formula=myform, num_of_folds=8) {

modl <- list()

predl <- list()

rmse <- list()

# Now, for each of the 10 subgroups of holdout data we will

# create a lda model based on all the data *except* the

# holdout group

for (ii in 1:length(folds)) {

training_boot_idx <- sample(1:nrow(mtcars),replace=TRUE)

test_boot_idx <- !(1:32 %in% training_boot_idx)

# This list holds the actual model we create for each of the folds

modl[[ii]] <- lm(formula = myform,

data = mtcars[training_boot_idx,]

)

# This list will contain / hold the models build on the fold

predl[[ii]] <- predict(modl[[ii]],

newdata=mtcars[test_boot_idx,],

type="response")

# Let's compute the RMSE and save it

errors <- predl[[ii]]-mtcars[test_boot_idx,]$mpg

rmse[[ii]] <- sqrt(mean(errors^2))

}

return(rmse=unlist(rmse))

}num_of_folds <- 8

# Just to be clear - here is what happens when we call the function

# once. We get back 8 RMSE values - one for each fold

set.seed(321)

(rmse <- make_mtcars_boot(num_of_folds))## [1] 2.441587 3.733133 3.216098 2.544607# Now we repeat this some number of times - like 10. So we get back

# 80 RMSE values

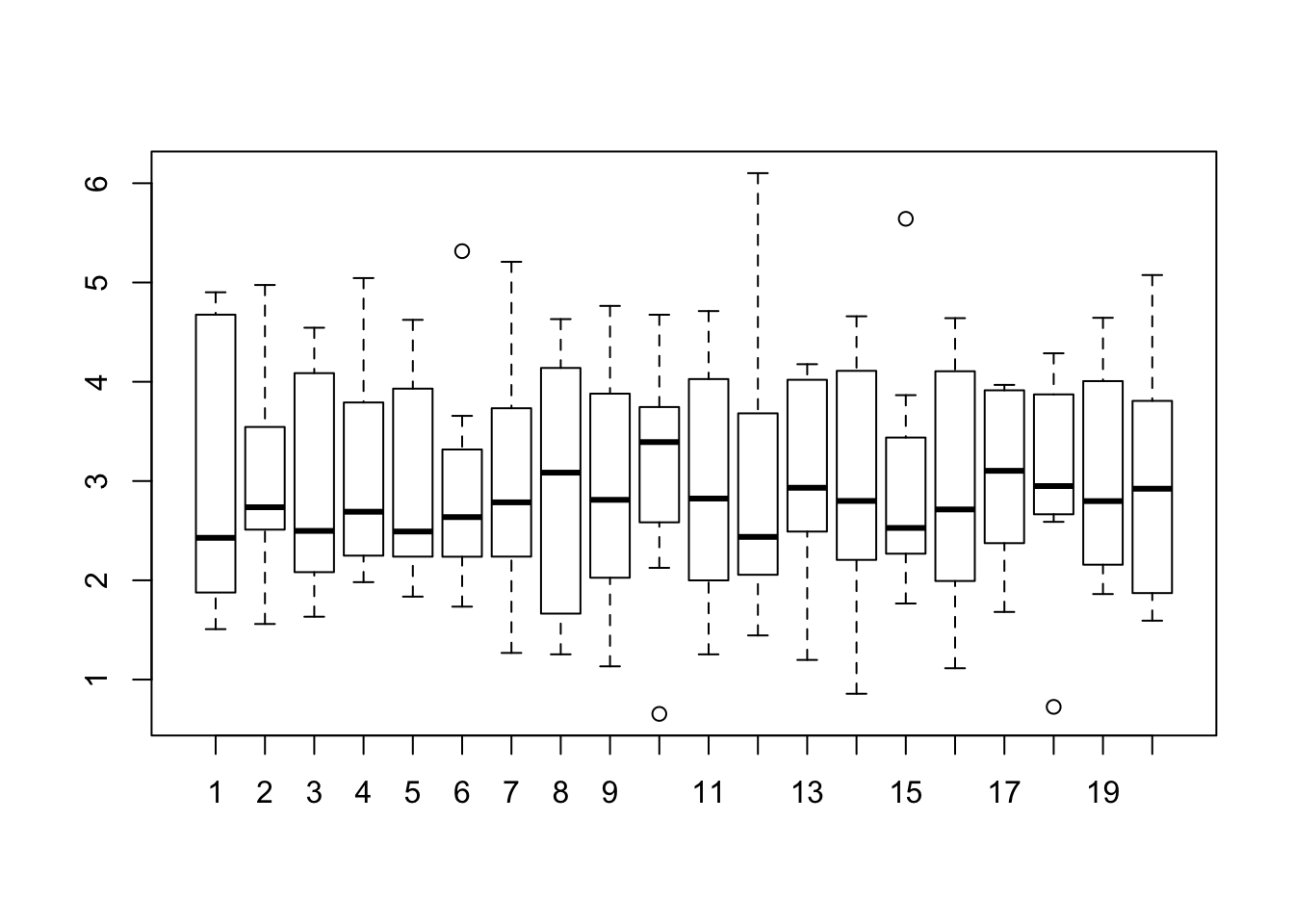

repeated_rmse <- sapply(1:20,make_mtcars_boot)

boxplot(repeated_rmse,main="RMSE Across 20 Repeats of 4 Boostrap Folds")

title <- paste("RMSE Across",num_of_folds,

"folds - ",as.character(deparse(myform)),sep=" ")

boot_repeated_rmse <- as.vector(repeated_rmse)

boxplot(boot_repeated_rmse)

# How does the RMSE from the boostrap approach compare to the

# K-Fold CV approach ?

print("Summary of Bootstrap RMSE")## [1] "Summary of Bootstrap RMSE"## Min. 1st Qu. Median Mean 3rd Qu. Max.

## 2.046 2.799 3.197 3.242 3.615 4.749## [1] "Summary of CV"## V1 V2 V3 V4

## Min. :1.508 Min. :1.560 Min. :1.633 Min. :1.981

## 1st Qu.:2.027 1st Qu.:2.535 1st Qu.:2.146 1st Qu.:2.360

## Median :2.428 Median :2.736 Median :2.498 Median :2.691

## Mean :3.046 Mean :3.015 Mean :2.939 Mean :3.061

## 3rd Qu.:4.581 3rd Qu.:3.342 3rd Qu.:4.087 3rd Qu.:3.538

## Max. :4.902 Max. :4.975 Max. :4.544 Max. :5.045

## V5 V6 V7 V8

## Min. :1.836 Min. :1.735 Min. :1.269 Min. :1.254

## 1st Qu.:2.269 1st Qu.:2.310 1st Qu.:2.311 1st Qu.:1.746

## Median :2.492 Median :2.637 Median :2.785 Median :3.085

## Mean :2.973 Mean :2.929 Mean :2.999 Mean :2.958

## 3rd Qu.:3.844 3rd Qu.:3.148 3rd Qu.:3.550 3rd Qu.:3.915

## Max. :4.624 Max. :5.316 Max. :5.208 Max. :4.631

## V9 V10 V11 V12

## Min. :1.133 Min. :0.6545 Min. :1.254 Min. :1.445

## 1st Qu.:2.213 1st Qu.:2.8133 1st Qu.:2.121 1st Qu.:2.198

## Median :2.811 Median :3.3927 Median :2.824 Median :2.437

## Mean :2.917 Mean :3.0968 Mean :2.958 Mean :2.987

## 3rd Qu.:3.617 3rd Qu.:3.6623 3rd Qu.:4.022 3rd Qu.:3.316

## Max. :4.764 Max. :4.6753 Max. :4.712 Max. :6.101

## V13 V14 V15 V16

## Min. :1.198 Min. :0.8574 Min. :1.766 Min. :1.114

## 1st Qu.:2.518 1st Qu.:2.2471 1st Qu.:2.298 1st Qu.:2.122

## Median :2.932 Median :2.7995 Median :2.528 Median :2.714

## Mean :3.033 Mean :2.9685 Mean :2.984 Mean :2.923

## 3rd Qu.:4.020 3rd Qu.:4.0352 3rd Qu.:3.225 3rd Qu.:3.968

## Max. :4.177 Max. :4.6596 Max. :5.642 Max. :4.641

## V17 V18 V19 V20

## Min. :1.681 Min. :0.7252 Min. :1.862 Min. :1.593

## 1st Qu.:2.465 1st Qu.:2.7033 1st Qu.:2.158 1st Qu.:1.967

## Median :3.103 Median :2.9494 Median :2.798 Median :2.923

## Mean :3.054 Mean :2.9983 Mean :3.054 Mean :2.984

## 3rd Qu.:3.904 3rd Qu.:3.8184 3rd Qu.:3.983 3rd Qu.:3.749

## Max. :3.969 Max. :4.2868 Max. :4.645 Max. :5.075