Chapter 6 Feature Importance

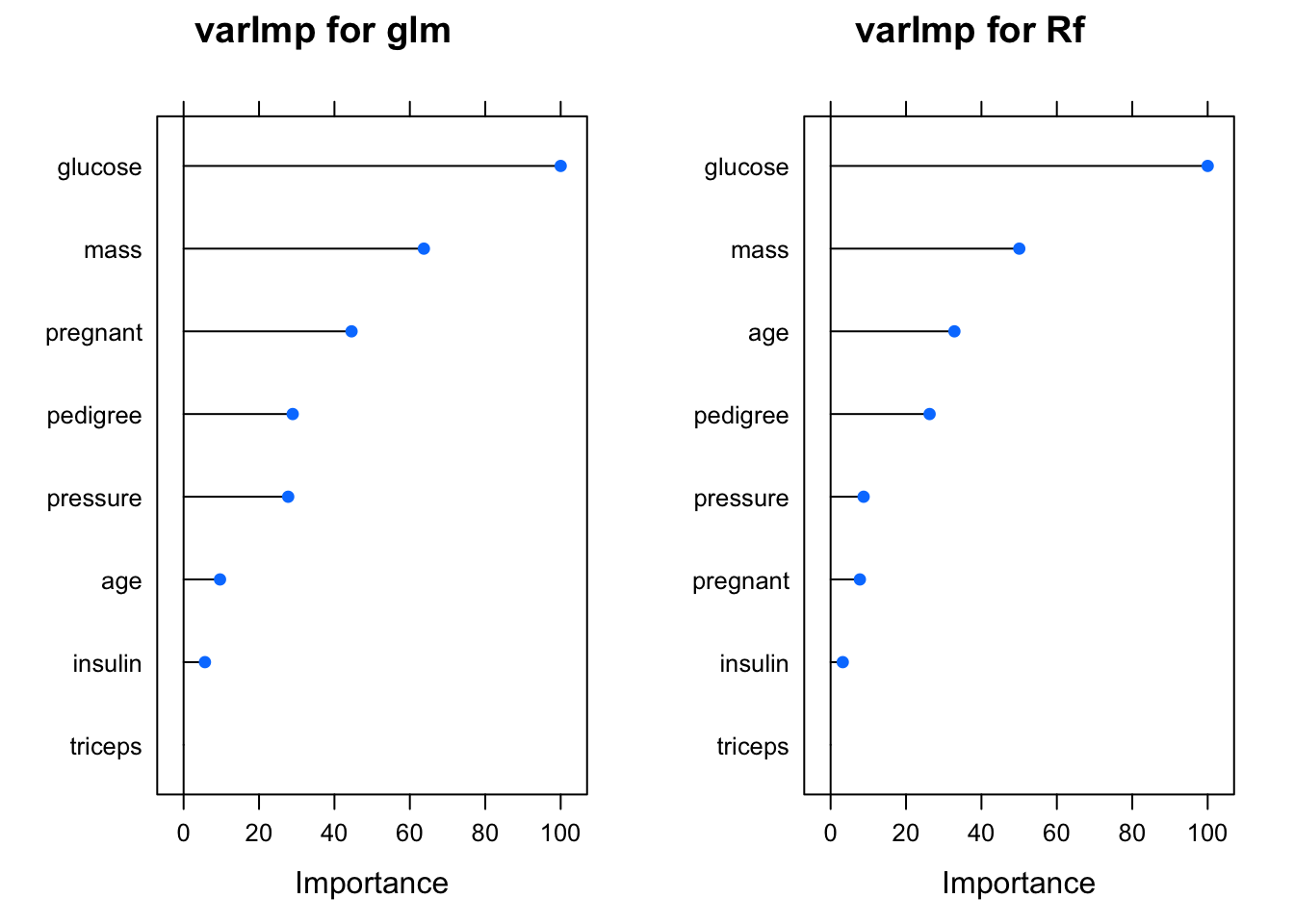

Another advantage of using the caret train function is that it provides a method to determine variable importance. This is useful when considering what features to include or not when building a model. If we summarize a given model, our myglm_caret model, we’ll see that some of our predictors are not significant.

We could use the varImp to use statistics generated by the specific modeling process itself. For more complex modeling techniques this winds up being very useful since digging into the model diagnostics can be daunting - although quite useful.

## glm variable importance

##

## Overall

## glucose 100.000

## mass 63.719

## pregnant 44.532

## pedigree 28.932

## pressure 27.731

## age 9.651

## insulin 5.656

## triceps 0.000If you wanted to see how the different models rates the significance of predictor variables then you can easily plot them.

library(gridExtra)

p1 <- plot(varImp(myglm_caret),main="varImp for glm")

p2 <- plot(varImp(myrf_caret),main="varImp for Rf")

grid.arrange(p1,p2,ncol=2)

6.0.1 Feature Elimination

The caret package also supports “recursive feature elimination” which automates the selection of optimal features. This can be controversial since such a process could work at the expense of important statistical considerations. However, it remains a tool in the Machine Learning toolbox.

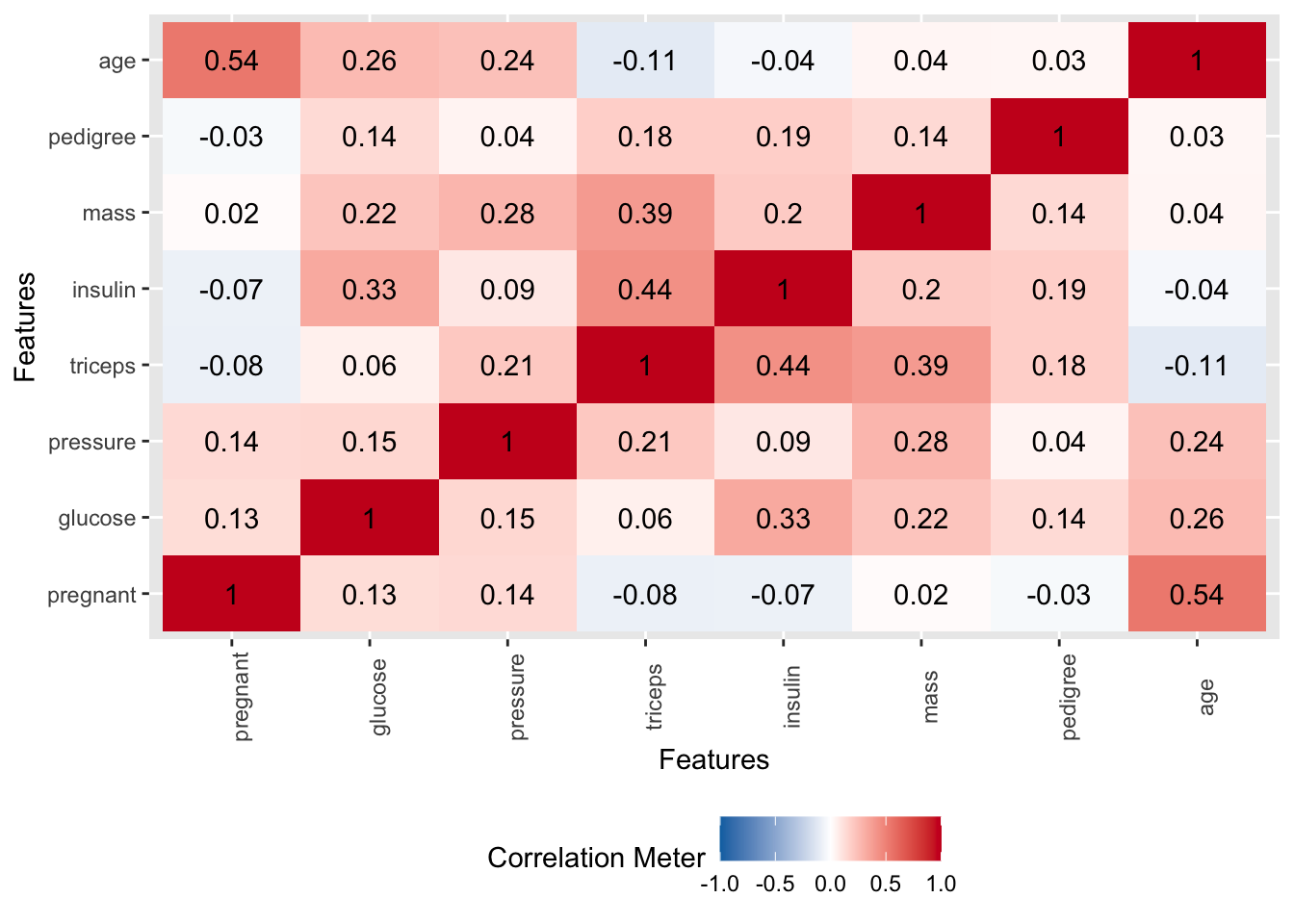

Let’s work though an example of this using caret functions. First, we’ll remove highly correlated predictor variables from consideration. We don’t really have a lot of highly correlated variables. It turns out that “age” is correlated with “pregnant” at a level of 0.54.

# find attributes that exceed some spcified threshold

highlyCorrelated <- findCorrelation(cor(pm[,1:8]),

cutoff=0.5,

names = TRUE)

# print indexes of highly correlated attributes

print(highlyCorrelated)## [1] "age"6.0.2 The rfe Function

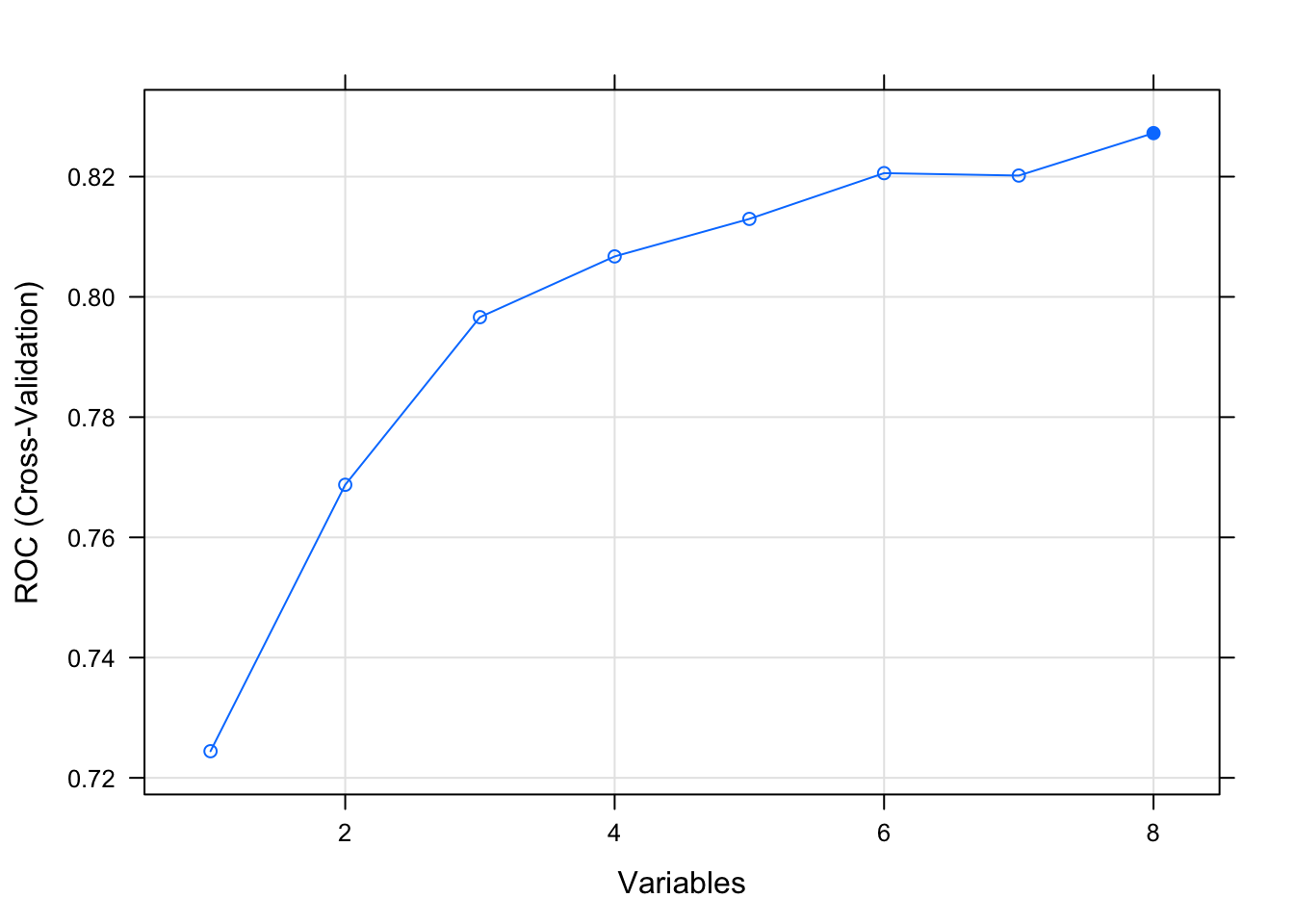

Let’s apply the RFE method on the Pima Indians Diabetes data set. The algorithm is configured to explore all possible subsets of the attributes. All 8 attributes are selected in this example, although in the plot showing the accuracy of the different attribute subset sizes, we can see that just 4 attributes gives almost comparable results

rfFuncs$summary <- twoClassSummary

control <- rfeControl(functions=rfFuncs,

method="cv",

number=4)

# run the RFE algorithm

results <- rfe(pm[,1:8],

pm[,9],

sizes=c(1:8),

rfeControl=control,

metric="ROC")

# summarize the results

print(results)##

## Recursive feature selection

##

## Outer resampling method: Cross-Validated (4 fold)

##

## Resampling performance over subset size:

##

## Variables ROC Sens Spec ROCSD SensSD SpecSD Selected

## 1 0.7244 0.852 0.4179 0.02110 0.008000 0.01219

## 2 0.7687 0.830 0.5224 0.01022 0.035402 0.04222

## 3 0.7966 0.834 0.5522 0.01954 0.036000 0.05170

## 4 0.8067 0.838 0.5746 0.02375 0.007659 0.04478

## 5 0.8130 0.842 0.5410 0.02846 0.030898 0.06716

## 6 0.8206 0.844 0.5784 0.03239 0.034871 0.06139

## 7 0.8202 0.848 0.5448 0.03241 0.041825 0.04640

## 8 0.8272 0.858 0.5821 0.03646 0.030199 0.03656 *

##

## The top 5 variables (out of 8):

## glucose, mass, age, pregnant, pedigree## [1] "glucose" "mass" "age" "pregnant" "pedigree" "insulin" "triceps" "pressure"